This article explores AI transparency from multiple angles to help you understand its importance and implementation. Transparent AI systems allow users to comprehend how these technologies reach conclusions rather than functioning as mysterious “black boxes.”

Throughout this guide, we provide real-world examples, statistics, and actionable strategies to make AI transparency accessible for teams without extensive technical expertise. By the end, you’ll have a comprehensive understanding of how transparent AI creates trust, improves compliance, and leads to better business outcomes.

The Critical Role of AI Transparency in Building Trust

Imagine deploying an AI system that makes decisions affecting your customers, yet nobody can explain how those decisions are made. This black box scenario creates distrust and hesitation among stakeholders. AI transparency serves as the foundation for ethical implementation and widespread adoption of artificial intelligence technologies.

What makes AI transparent? At its core, transparent AI allows humans to understand how systems reach conclusions. Simple examples include rule-based reflex agents that follow clear if-then logic patterns, making their decision processes easy to trace and verify. More complex systems require additional transparency mechanisms to maintain accountability.

Trust remains essential for AI adoption across industries. According to Deloitte research, 95% of executives consider ethical concerns a top priority when implementing AI solutions. Organizations face numerous transparency challenges, from technical complexity to regulatory compliance requirements.

For teams without technical expertise, platforms like Cubeo AI offer no-code solutions that democratize access to transparent AI tools. These platforms enable organizations to implement ethical AI practices without extensive programming knowledge, bridging the gap between technical capabilities and practical business applications.

Understanding AI transparency and why it matters

AI transparency means we can understand, explain, and verify how AI systems reach conclusions. When teams deploy AI solutions, everyone needs clarity about system operations. This foundation of trust ensures decisions come from understandable processes rather than mysterious “black boxes.”

The difference between opaque and transparent AI models resembles two ways of getting directions: asking someone who explains each turn, or following a GPS that simply commands “turn right.” The first gives you context; the second leaves you wondering why you’re turning.

Businesses embracing transparent AI gain significant advantages. Customer trust increases, regulatory compliance becomes easier, and teams make better decisions with systems they actually understand.

What makes AI transparent?

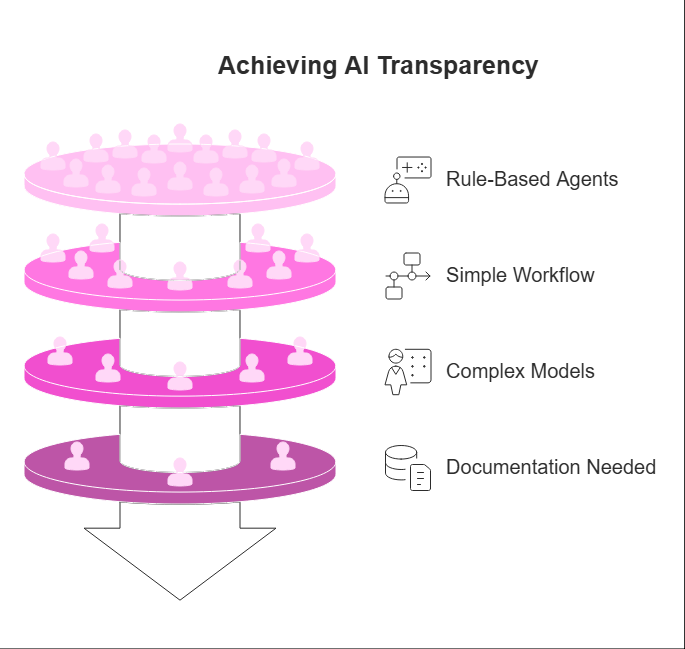

Transparency in AI systems exists on a spectrum. At one end, simple rule-based reflex agents offer clear decision logic through explicit if-then rules. Anyone can audit these straightforward systems.

These transparent agents follow a basic workflow: sense input, match a rule, take action, repeat. This creates a fully visible decision path that’s easy to inspect.

Examples of such systems range from basic chatbots to industrial controls. In fact, many of us interact with this kind of transparent AI daily without realizing it.
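To make the sense, match, act, repeat loop concrete, here is a minimal sketch of a rule-based reflex agent in Python. The thermostat-style rules, the `Rule` type, and the `decide` function are hypothetical illustrations, not taken from any product. The point is that every action comes back together with the name of the rule that produced it, so the decision path can be inspected directly.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass(frozen=True)
class Rule:
    """A single if-then rule: a readable name, a condition, and an action."""
    name: str
    condition: Callable[[dict], bool]
    action: str

# Hypothetical thermostat rules, checked in order; the first match wins.
RULES = [
    Rule("too_cold", lambda p: p["temp_c"] < 18, "heat_on"),
    Rule("too_hot", lambda p: p["temp_c"] > 24, "cool_on"),
    Rule("comfortable", lambda p: True, "idle"),
]

def decide(percept: dict) -> tuple[str, Optional[str]]:
    """Sense -> match a rule -> act. Returns the action and the rule that fired."""
    for rule in RULES:
        if rule.condition(percept):
            return rule.action, rule.name
    return "no_action", None

# Repeat the loop over a stream of sensor readings, keeping a trace of every decision.
trace = []
for reading in [{"temp_c": 15}, {"temp_c": 21}, {"temp_c": 27}]:
    action, fired = decide(reading)
    trace.append((reading["temp_c"], fired, action))
    print(f"temp={reading['temp_c']}C rule={fired} action={action}")
```

Because the trace records which rule fired for each input, auditing this agent amounts to reading the rule list and the log.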

More complex machine learning models present greater challenges. According to research from the Open Data Institute, fewer than 40% of evaluated generative AI models provide clear information about their data practices. This lack of visibility creates significant barriers to trust.

For AI to be truly transparent, we need documentation of datasets, algorithms, and evaluation methods. These elements help users understand not just what the system does, but why it reaches specific conclusions.
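One lightweight way to capture that documentation is a structured record in the spirit of a model card. The sketch below uses a hypothetical schema: the field names, the example model, and the metric value are illustrative placeholders, not figures from any real system.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class ModelCard:
    """Minimal, hypothetical documentation record for one model version."""
    model_name: str
    version: str
    algorithm: str                      # what kind of model this is
    training_data: list[str]            # where the data came from
    intended_use: str
    evaluation: dict[str, float] = field(default_factory=dict)  # metric -> value
    known_limitations: list[str] = field(default_factory=list)

    def missing_sections(self) -> list[str]:
        """List documentation sections that are still empty."""
        return [name for name, value in asdict(self).items() if value in ("", [], {})]

card = ModelCard(
    model_name="support-ticket-router",   # hypothetical example model
    version="1.2.0",
    algorithm="logistic regression on TF-IDF features",
    training_data=["2023 support tickets (internal, anonymized)"],
    intended_use="Route incoming tickets to a queue; a human reviews low-confidence cases.",
    evaluation={"accuracy": 0.91},        # placeholder value, not a real result
)

print(card.missing_sections())            # ['known_limitations']
print(json.dumps(asdict(card), indent=2))
```

Flagging empty sections before release is a simple way to keep documentation from lagging behind the model itself.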

The business case for AI transparency

Companies now recognize transparency as a competitive advantage rather than just a compliance requirement. A Deloitte survey found that 80% of executives list trust as a key AI investment driver. This focus on transparency boosts customer loyalty and reduces risks to reputation.

Organizations with transparent AI frameworks report a 25% improvement in decision accuracy. In addition, 70% of business leaders expect clear AI governance to cut compliance costs by about 30%. These figures suggest real returns from prioritizing transparency.

Despite these benefits, only 40% of businesses currently audit their AI outputs systematically. This gap presents both a challenge and opportunity for teams seeking an edge through responsible AI practices.

Our approach at Cubeo AI addresses this need by making transparency implementation simpler for teams without extensive technical expertise. By focusing on clear, explainable systems, we help bridge the gap between powerful AI and understandable processes.

Key challenges organizations face in AI transparency

AI transparency presents several complex hurdles for teams across industries. Technical limitations, privacy concerns, and bias issues often compound one another, and without deliberate strategies these obstacles make stakeholder trust very hard to build.

The black box problem with complex AI models

Modern AI systems function like mysterious black boxes. Inputs go in, outputs come out. What happens in between? That remains hidden from view.

Healthcare teams can’t explain why an algorithm flagged a specific diagnosis. Financial institutions struggle to justify loan rejections based on neural network decisions. This opacity creates serious trust issues in high-stakes environments.

Retrieval-Augmented Generation (RAG) offers a promising solution to this problem. RAG systems ground AI outputs in external documents, creating clear audit trails. Studies show they reduce hallucinations by up to 60% in customer support applications. This approach helps us trace which information influenced specific AI decisions.
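The audit-trail idea behind RAG can be sketched without any particular framework. The example below is a deliberately simplified, hypothetical illustration: it ranks a tiny in-memory document set by word overlap (standing in for a real embedding search) and returns the retrieved document IDs alongside the context. A production system would pass that context to a language model; this sketch covers only the retrieval and citation step.

```python
import re

# Hypothetical knowledge base: document ID -> text.
DOCS = {
    "refund-policy": "Refunds are available within 30 days of purchase with a receipt.",
    "shipping-faq": "Standard shipping takes 5 to 7 business days.",
    "warranty": "Hardware carries a one-year limited warranty against defects.",
}

def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for real embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, k: int = 2) -> list[tuple[str, int]]:
    """Rank documents by word overlap and keep the top k with a nonzero score."""
    q = tokenize(question)
    scored = [(doc_id, len(q & tokenize(text))) for doc_id, text in DOCS.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [(doc_id, score) for doc_id, score in scored[:k] if score > 0]

def grounded_context(question: str) -> dict:
    """Bundle retrieved passages with their IDs so the output carries an audit trail."""
    hits = retrieve(question)
    return {
        "question": question,
        "sources": [doc_id for doc_id, _ in hits],
        "context": "\n".join(f"[{doc_id}] {DOCS[doc_id]}" for doc_id, _ in hits),
    }

result = grounded_context("Can I get refunds after 30 days?")
print(result["sources"])   # ['refund-policy', 'shipping-faq']
print(result["context"])
```

Keeping the source IDs in the response, rather than only the generated text, is what lets a reviewer check afterwards which documents shaped an answer.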

Data privacy versus transparency balance

We face a fundamental tension between explaining AI processes and protecting sensitive data. How can we show our work without exposing private information?

Reports indicate 65% of companies worry about leaking personal data through AI outputs. This concern also surfaced in our analysis of workflow tools.

Data masking conceals personally identifiable information (PII) such as names, email addresses, and phone numbers. Anonymization techniques such as aggregation replace individual records with summary statistics.
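Here is a minimal sketch of masking and aggregation using only Python's standard library. The regular expressions are simplified assumptions that catch common email and 10-digit phone formats; they are not a complete PII detector. The `min_group_size` cut-off is likewise an illustrative value: a group with a single member would simply reveal that person's data, so small groups are suppressed.

```python
import re
from collections import defaultdict
from statistics import mean

# Simplified patterns (assumption): common email and 10-digit phone formats only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b")

def mask_pii(text: str) -> str:
    """Replace detected emails and phone numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

def aggregate(records: list[dict], group_key: str, value_key: str,
              min_group_size: int = 2) -> dict[str, float]:
    """Report the mean per group; suppress groups too small to hide an individual."""
    groups = defaultdict(list)
    for record in records:
        groups[record[group_key]].append(record[value_key])
    return {
        group: round(mean(values), 2)
        for group, values in groups.items()
        if len(values) >= min_group_size
    }

log_line = "Loan flagged for jane.doe@example.com, callback 555-123-4567."
print(mask_pii(log_line))
# Loan flagged for [EMAIL], callback [PHONE].

applicants = [
    {"name": "A", "region": "north", "score": 0.72},
    {"name": "B", "region": "north", "score": 0.64},
    {"name": "C", "region": "south", "score": 0.81},
]
print(aggregate(applicants, "region", "score"))
# {'north': 0.68}  ("south" has one member, so it is suppressed)
```

Real deployments usually need much larger minimum group sizes and dedicated PII-detection tooling; the structure, masking before logging and aggregating before reporting, is what this sketch is meant to show.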

Secure computing enclaves allow models to process confidential information safely. These approaches help us share model logic without compromising privacy requirements.

Algorithmic bias and fairness concerns

AI systems trained on biased data perpetuate existing inequalities. In fact, they sometimes amplify these problems in unexpected ways.

Facial recognition technologies show higher error rates for women and people with darker skin tones. Hiring algorithms have demonstrated preferences for candidates matching historical profiles.

These fairness issues undermine trust in our AI systems. They also expose organizations to reputational damage and legal liability.

We need diverse training datasets to address these problems effectively. Regular fairness audits help catch issues before deployment.

Continuous monitoring for discriminatory patterns must become standard practice. This proactive approach prevents unfair outcomes while building confidence in AI decision processes.
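A fairness audit can start with something as simple as comparing selection rates across groups. The sketch below computes each group's positive-outcome rate and the ratio of the lowest rate to the highest. The 0.8 threshold follows the "four-fifths rule" that US employment-selection guidance uses as a rule of thumb; treat it as a screening heuristic rather than a legal or statistical test. The decision data is made up.

```python
from collections import Counter

def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive decisions (e.g. 'advance to interview') per group."""
    totals, positives = Counter(), Counter()
    for group, selected in outcomes:
        totals[group] += 1
        positives[group] += selected   # True counts as 1, False as 0
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0

# Made-up screening decisions: (group, was_selected)
decisions = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 25 + [("group_b", False)] * 75
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)                    # {'group_a': 0.4, 'group_b': 0.25}
print(f"ratio = {ratio:.3f}")   # ratio = 0.625
if ratio < 0.8:                 # four-fifths rule of thumb
    print("Flag for review: selection rates differ substantially between groups.")
```

For continuous monitoring, the same check can run on each new batch of production decisions, with any flagged batch routed to human review.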

Navigating AI regulations and compliance requirements

AI regulatory frameworks evolve rapidly worldwide. A comprehensive Global AI Regulations Tracker shows jurisdictions covering 70% of global GDP now have AI-specific rules. These guidelines generally focus on transparency, accountability, and risk management, though they vary by region.

The EU AI Act and its global impact

The European Union leads global AI oversight with its comprehensive risk-based framework. This approach categorizes AI applications based on potential harm levels. High-risk systems must meet strict transparency mandates: providers must maintain thorough technical documentation and give deployers clear information about how the system works and where its limits lie.

Penalties for the most serious violations can reach €35 million or 7% of worldwide annual turnover, whichever is higher. This creates powerful motivation for businesses to prioritize transparency in their AI operations. Many companies now view these requirements as competitive differentiators rather than burdens.

User rights receive special attention under these regulations. People affected by decisions based on high-risk AI systems can request an explanation of the system's role in those decisions, and providers of general-purpose AI models must publish a summary of the content used for training. These protections aim to prevent harm in sensitive areas like healthcare, finance, and public services.

The EU approach creates regulatory ripple effects globally. Similar legislation is now emerging across North America and the Asia-Pacific region. Organizations adopting EU-compliant practices often gain advantages through demonstrated trustworthiness. They also reduce compliance costs across multiple jurisdictions.

Building compliance into AI systems

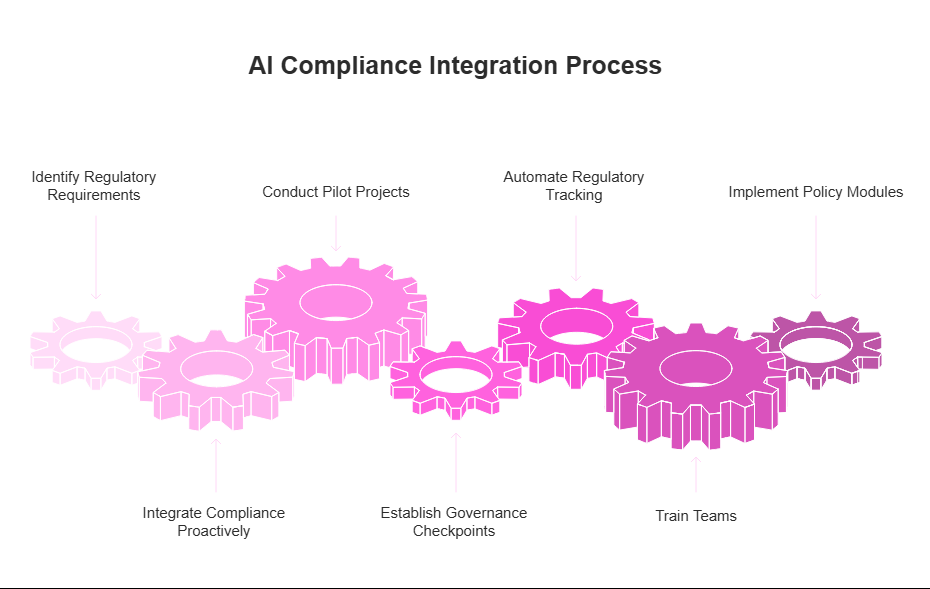

Proactive integration of regulatory requirements saves resources compared to retrofitting existing systems. About 77% of companies view AI compliance as a top priority according to recent case studies. Yet many still struggle with implementation challenges.

Strategic pilot projects help uncover hidden costs before full deployment. This addresses a common problem where 53% of leaders report unexpected expenses after implementation. Our experience shows that early testing significantly reduces these surprises.

Effective governance requires checkpoints throughout the AI lifecycle:

• Validating data collection and preparation

• Monitoring for bias during model training

• Verifying deployments with human oversight

• Auditing performance against compliance standards
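These checkpoints can be encoded as explicit gates that a model must pass before moving to the next stage. The sketch below is hypothetical: the stage names mirror the four checkpoints in the list, but the individual checks (`has_data_source`, `bias_report_attached`, `human_signoff`, `meets_accuracy_floor`) and the 0.85 accuracy floor are placeholder examples of what a team might require, not a regulatory checklist.

```python
from dataclasses import dataclass, field
from typing import Callable

Check = Callable[[dict], bool]

@dataclass
class Checkpoint:
    stage: str
    checks: dict[str, Check] = field(default_factory=dict)

    def failures(self, artifact: dict) -> list[str]:
        """Names of the checks this artifact does not pass."""
        return [name for name, check in self.checks.items() if not check(artifact)]

# Hypothetical gates mirroring the four lifecycle checkpoints.
PIPELINE = [
    Checkpoint("data", {"has_data_source": lambda a: bool(a.get("data_source"))}),
    Checkpoint("training", {"bias_report_attached": lambda a: "bias_report" in a}),
    Checkpoint("deployment", {"human_signoff": lambda a: a.get("approved_by") is not None}),
    Checkpoint("audit", {"meets_accuracy_floor": lambda a: a.get("accuracy", 0) >= 0.85}),
]

def run_gates(artifact: dict) -> list[tuple[str, list[str]]]:
    """Walk the stages in order; stop at the first stage with failing checks."""
    passed = []
    for checkpoint in PIPELINE:
        failed = checkpoint.failures(artifact)
        if failed:
            return passed + [(checkpoint.stage, failed)]
        passed.append((checkpoint.stage, []))
    return passed

candidate = {"data_source": "crm_export_2024", "bias_report": "report.pdf", "accuracy": 0.9}
for stage, failed in run_gates(candidate):
    print(stage, "OK" if not failed else f"BLOCKED: {failed}")
```

Here the candidate stops at deployment because no reviewer has signed off, which is the human-oversight checkpoint doing its job.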

Cloud platforms offer valuable tools for automating regulatory tracking. These solutions can manage compliance logs and version control with minimal effort. Regular team training ensures everyone understands governance requirements.

Well-designed policy modules simplify regulatory adherence by embedding requirements directly into workflows. This approach makes transparency accessible even for teams without specialized legal expertise, and it can turn regulations from obstacles into opportunities for building stakeholder trust.

Practical steps to implement transparent AI today

Moving from theoretical transparency to practical implementation requires accessible tools and structured processes. Organizations can take concrete steps to enhance AI explainability without extensive technical expertise or massive investments. The growing market for no-code AI solutions, valued at $3.83 billion in 2023 and projected to reach $24.42 billion by 2030 according to Grand View Research, demonstrates the increasing demand for accessible AI development options.
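As a quick sanity check on the Grand View Research figures, the implied compound annual growth rate (CAGR) over the seven years from 2023 to 2030 follows from the standard formula (end / start)^(1 / years) - 1. This is our own arithmetic on the two cited values, not a rate reported by the source.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1

# Market size figures cited in the text, in USD billions.
rate = cagr(3.83, 24.42, 2030 - 2023)
print(f"Implied CAGR: {rate:.1%}")   # Implied CAGR: 30.3%
```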

Democratizing AI with no-code platforms

No-code platforms transform AI development from a specialized technical skill to an accessible business function. These tools enable subject matter experts to create transparent AI solutions without writing code, following a structured seven-step AI development process. The approach begins with defining clear objectives, preparing quality data, and assembling cross-functional teams.

Market research indicates no-code AI platforms accounted for 76.8% of the AI development market share in 2023, highlighting their critical role in democratizing access. Companies report 64% efficiency improvements when business teams can directly customize AI solutions rather than relying solely on technical specialists.

Real-world applications span multiple departments:

• Marketing teams creating content optimization assistants

• Customer service building transparent support chatbots

• Sales departments developing lead qualification tools

• HR teams implementing candidate screening assistants

Each solution can include built-in transparency features such as data lineage tracking and decision explanations. This keeps the AI development process accessible to non-technical stakeholders while maintaining accountability.
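As an illustration of what a decision explanation can look like for the lead qualification case, here is a hypothetical points-based scorer. The features, weights, and threshold are invented for the example. The design point is that the score is a sum of named contributions, so the explanation is exact rather than approximated after the fact.

```python
# Hypothetical weights a sales team might agree on; each is a readable business rule.
WEIGHTS = {
    "visited_pricing_page": 20,
    "company_size_over_100": 25,
    "requested_demo": 40,
    "unsubscribed_from_email": -30,
}
THRESHOLD = 50  # hypothetical cut-off for "qualified"

def score_lead(lead: dict[str, bool]) -> tuple[int, bool, list[str]]:
    """Return the total score, the decision, and a line-by-line explanation."""
    contributions = {f: w for f, w in WEIGHTS.items() if lead.get(f, False)}
    total = sum(contributions.values())
    # List the largest contributions (positive or negative) first.
    explanation = [f"{feature}: {points:+d}" for feature, points in
                   sorted(contributions.items(), key=lambda kv: -abs(kv[1]))]
    return total, total >= THRESHOLD, explanation

total, qualified, why = score_lead(
    {"visited_pricing_page": True, "requested_demo": True, "unsubscribed_from_email": True}
)
print(total, qualified)   # 30 False
print("\n".join(why))
```

A business user can read the output ("requested_demo: +40", "unsubscribed_from_email: -30", ...) and see exactly why a lead fell short of the threshold, and can propose a weight change without touching code elsewhere.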

Building transparency into AI development processes

Transparent AI development shares principles with clear content structure: just as structured content with clear headings improves both human and machine comprehension, well-documented AI models improve understanding and trust.

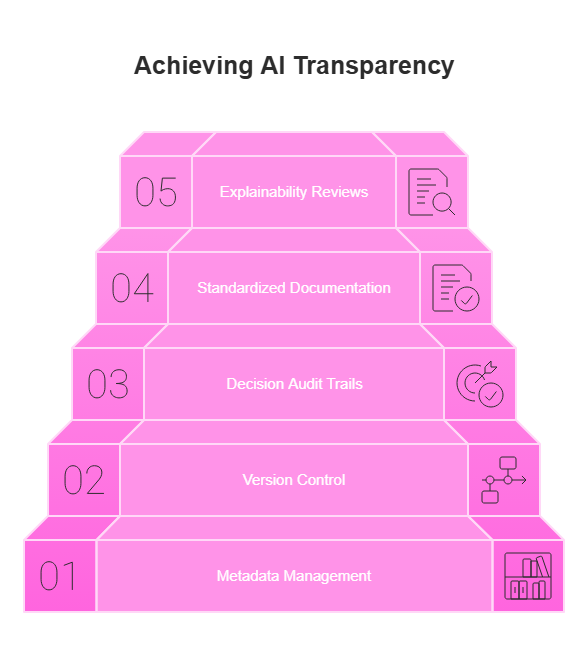

Effective transparency practices include:

- Comprehensive metadata management tracking data sources, transformations, and feature engineering decisions

- Version control systems documenting model iterations and performance changes

- Decision audit trails explaining key development choices and their rationales

- Standardized documentation templates ensuring consistent information across projects

- Regular explainability reviews testing whether outputs can be understood by stakeholders

Teams implementing these practices report smoother compliance reviews and faster debugging when issues arise. The documentation process itself often reveals potential problems before deployment, reducing costly fixes later.
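Decision audit trails and version history are easier to trust when entries cannot be silently edited. One common pattern, sketched here with Python's standard `hashlib` and `json` modules, is an append-only log in which each entry stores the hash of the previous one, so altering any past entry breaks the chain. The entry fields (`event`, `detail`) and the example events are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

class AuditTrail:
    """Append-only log; each entry includes the SHA-256 hash of the previous entry."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        """Hash every field except the stored hash itself."""
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def record(self, event: str, detail: str) -> dict:
        entry = {
            "index": len(self.entries),
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "detail": detail,
            "prev_hash": self.entries[-1]["hash"] if self.entries else "0" * 64,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev = "0" * 64
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True

trail = AuditTrail()
trail.record("data_source", "Loaded 2024 CRM export; dropped rows with missing region")
trail.record("model_version", "v1.3: added tenure feature after review")
trail.record("decision", "Kept decision threshold at 0.5 after stakeholder review")
print(trail.verify())   # True

trail.entries[1]["detail"] = "edited after the fact"
print(trail.verify())   # False
```

On its own, the chain only makes tampering detectable to someone who can recompute it; storing the latest hash somewhere outside the log (a ticket, a signed release note) is what prevents the whole chain from being quietly rewritten.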

Structure and clarity benefit humans and machines alike, in AI development as in content creation. A3Logics projects a 28.3% growth rate for the no-code AI market between 2023 and 2033, a trend that reflects, in part, how these platforms simplify transparency implementation through automated documentation and built-in explainability features.

What's next for AI transparency as technologies evolve

The future of AI transparency looks promising. Researchers continue to push the boundaries of explainability, and recent advances in post-hoc explanation and interactive visualization methods make complex models easier to understand. These tools will change how teams implement AI systems, because trust grows when stakeholders can follow decision paths.
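Post-hoc methods treat a model as a black box and probe it from the outside. Permutation feature importance is one widely used example: shuffle one input column, re-score the model, and measure how much accuracy drops. The sketch below applies it to a hypothetical stand-in model (a hand-written function) on synthetic data, using only the standard library. Real projects would typically use a library implementation such as scikit-learn's `permutation_importance`.

```python
import random

def model(row: list[float]) -> int:
    """Hypothetical black-box classifier; we only call it, never inspect it."""
    return int(2.0 * row[0] + 0.5 * row[1] > 1.0)

def accuracy(rows: list[list[float]], labels: list[int]) -> float:
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)

def permutation_feature_importance(rows, labels, n_repeats=10, seed=0):
    """Mean accuracy drop when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return baseline, importances

# Synthetic data: labels come from the model itself, and feature 2 is ignored by it.
data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random(), data_rng.random()] for _ in range(200)]
y = [model(r) for r in X]

baseline, imp = permutation_feature_importance(X, y)
print(f"baseline accuracy: {baseline:.2f}")
for name, value in zip(["feature_0", "feature_1", "feature_2"], imp):
    print(f"{name}: {value:.3f}")
```

The unused feature scores exactly zero and the heavily weighted feature scores highest, which is the kind of ranking stakeholders can check against their own expectations of what should drive a decision.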

Emerging standards and best practices

Standardization efforts are gaining momentum across industries. The Defense Advanced Research Projects Agency (DARPA) has driven significant explainability research through its Explainable Artificial Intelligence (XAI) program.

This government-backed initiative has funded projects with built-in interpretability metrics. Systems developed under the program are reported to reach 90% accuracy while maintaining traceable decision logs. Benchmarks like these help establish clear guidelines for transparent AI implementation.

Cross-industry collaborations focus on shared evaluation frameworks that measure clarity across different applications, effectively creating a common language for AI explanations.

The frameworks typically include:

• Measure feature attribution clarity with quantitative metrics

• Document models with standardized templates

• Verify systems through consistent audit criteria

• Explain model behaviors with common vocabulary

Academic research is converging with practical guidelines, creating a foundation for transparency that spans both technical and business domains.

Building an organizational culture of transparent AI

Technical solutions alone won’t ensure lasting transparency; cultural shifts are needed too. Forward-looking companies establish cross-functional AI governance committees.

These committees bring together technical, legal, and business perspectives, which helps ensure transparency is considered at every development stage.

Training programs help teams understand both the technical and human dimensions of explainable AI. According to reports on legal technology trends, organizations with such programs report higher user trust, especially in sensitive applications like contract analysis.

Peer reviews of model updates create accountability within teams. Regular audits conducted by diverse groups help identify potential blind spots. These practices turn transparency from a technical requirement into an organizational value. AI initiatives benefit when these principles guide them from the start rather than being treated as afterthoughts.

FAQs

How does algorithmic bias undermine AI fairness?

Algorithmic bias arises when AI systems learn skewed patterns from unrepresentative or historically biased data, producing systematically worse or incorrect outcomes for some groups. These unequal outcomes undermine fairness and erode trust. Addressing bias requires multidisciplinary efforts and ongoing research.

How can no-code platforms foster explainable AI?

No-code platforms enable building AI solutions without coding expertise. They often include explainable AI features for transparency. These platforms empower citizen developers and enhance workforce efficiency.

What future trends will shape AI transparency?

Future AI transparency will emphasize fairness and ethical considerations. Regulatory frameworks will evolve to ensure responsible AI use. Tools will become more sophisticated, integrating human oversight and adapting to new technologies.