Think of E-E-A-T in SEO like professional licensing. A doctor doesn’t just claim medical expertise once and forget about it. They maintain credentials, document continuing education, and provide evidence of their qualifications for every patient interaction (photos, interview notes, changelogs).

Your content needs the same credentialing system.

E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) requires continuous proof, not just quality writing, as Google’s people-first content guidance makes clear. Most teams know this matters but lack repeatable checklists to capture proof for each claim.

The solution has 3 practical layers:

- Define what counts as proof for your content type

- Build simple checklists and file stores for photos, logs, receipts

- Scale with no-code workflows (AI agents) that capture citations and add structured metadata (schema.org) while maintaining audit logs

This framework transforms E-E-A-T from abstract concept into measurable content credibility you can audit and improve.

What E-E-A-T Really Means for Content Teams

Most teams treat E-E-A-T like a ranking checklist. That misses the point entirely.

E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) measures whether your content deserves trust for important decisions. You’re building credibility signals, not gaming algorithms.

Recent developments show you’re optimizing for two audiences simultaneously. Google’s systems evaluate your E-E-A-T signals for search rankings. LLMs use retrieval heuristics that often mirror E-E-A-T principles — citation presence, clear bylines, and recent revisions — so structured proof improves the chance AI will surface your work.

This dual optimization means your evidence standards just got higher. E-E-A-T expert analysis shows how these signals now matter for both search and AI citation systems. Understanding generative AI helps content teams grasp why structured proof matters for both human readers and machine learning models.

YMYL vs General Content Requirements

Not all content carries equal risk. YMYL (Your Money or Your Life) topics affecting health, finance, or safety decisions require stronger evidence standards.

Quick YMYL classification checklist:

- Would bad advice cause financial, physical, or emotional harm?

- Does the author have documented expertise in this area?

- Are primary sources and recent data cited?

If you answer yes to question 1, you need higher E-E-A-T standards: documented expertise, cited sources, and author credentials.

Examples requiring stronger evidence: investment advice, medical recommendations, legal guidance, safety procedures.

General content about entertainment, sports, or lifestyle topics can use lighter evidence standards while maintaining quality.

Moving Past Rankings to Trust Infrastructure

Rather than chasing short-term ranking boosts, build repeatable systems like author verification, revision logs and artifact capture.

Traditional SEO focuses on temporary ranking tactics. E-E-A-T content strategy builds sustainable credibility systems that work across search engines and AI platforms.

Your trust infrastructure includes author verification, source documentation, revision tracking, and evidence artifacts. These signals help both Google’s algorithms and LLM training processes identify your content as reliable.

This approach future-proofs your content strategy. When new AI models emerge or Google updates its algorithms, strong trust infrastructure adapts automatically because it’s built on genuine credibility.

Building Your E-E-A-T Proof Framework

Your content team needs measurable proof systems, not abstract trust concepts.

Building E-E-A-T credibility requires specific evidence artifacts and trackable KPIs. Teams implementing systematic proof frameworks see measurable results: proven E-E-A-T strategies show 67% traffic increases and 43% ranking improvements for YMYL keywords within six months.

The framework has two operational components: evidence collection and measurement systems. Evidence artifacts include author credentials, citation documentation, and case study results. KPIs track citation rates, author profile completeness, and schema coverage.

Evidence Artifacts That Actually Matter

Start with 3 concrete proof categories your team can collect immediately.

Author credentials and verification: Complete author bios with professional credentials, contact information, and expertise documentation. Websites with clear author expertise rank 25% higher on average compared to anonymous content.

Citation quality and source authority: Document every claim with authoritative sources. Track primary source usage, publication dates, and domain authority scores. Store citation logs in a versioned Google Sheet with columns: source URL | claim ID | verification date | next review date.

Case study documentation and results tracking: Collect screenshots, data exports, interview recordings, and outcome measurements. Store before/after comparisons, client testimonials, and performance metrics. These artifacts prove first-hand experience and demonstrate real-world expertise.

Roles (who/what): authors supply bios and artifacts; editors verify claims and citations; content ops applies schema markup (structured metadata that tells search systems who wrote what and when), stores artifacts, and runs the weekly audit; legal/review signs off on YMYL items.

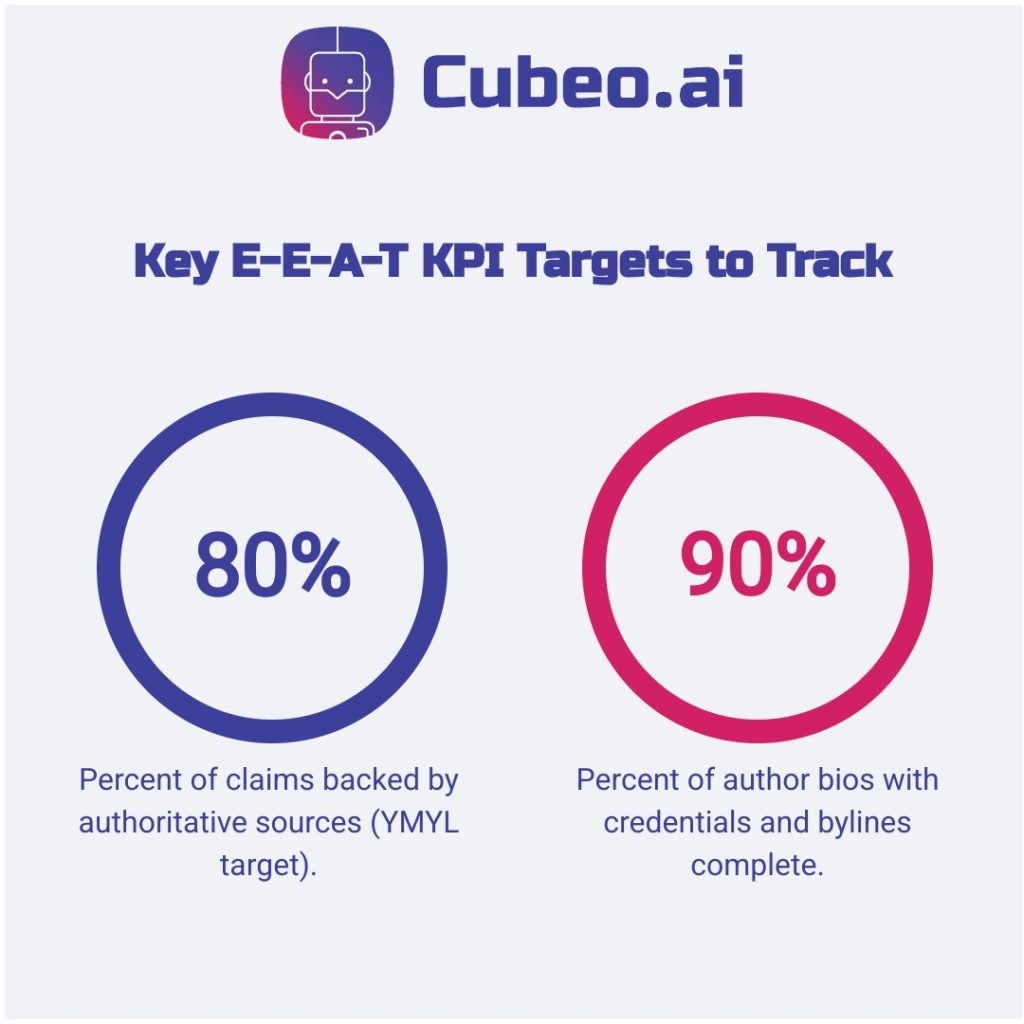

KPIs and 10-Minute Audit Recipe

Track measurable outcomes to prove your E-E-A-T investment.

Citation rate per article — percent of claims backed by authoritative sources. Target: 80% for YMYL, 60% for general.

Author profile completeness — percent of bios with verified credentials, contact info, and published bylines. Moz’s E-E-A-T guidance recommends ~90% completion.

Schema coverage — percent of pages with validated structured data (schema = machine readable metadata that tells systems who wrote what and when).

Scaling E-E-A-T with AI Agents and Governance

Manual evidence collection breaks down at scale. Use 3 agents to capture evidence automatically, then route results through governance checkpoints so editors and legal can approve before publishing.

A Governance Institute report found 90% of organizations adopted AI but struggled with governance and measurement. Content teams face the same challenge: E-E-A-T requirements grow faster than manual capacity.

Successful implementation requires automated research and citation agents, structured governance checkpoints, and LEO-ready output formatting (machine-readable headings, inline citations, and structured metadata LLMs use) that works for both search engines and AI models.

Research and Citation Capture Agents

Deploy 3 automated workflows to handle evidence collection systematically.

Source verification agent: Automatically checks domain authority, publication dates, and author credentials for cited sources. Flags low-authority sources and suggests authoritative alternatives from your approved source database. Tracks authority scores (domain rating or publisher reputation; store as a column in versioned Google Sheet or CMS audit field).

Citation formatting agent: Converts raw source URLs into properly formatted citations with metadata extraction. Captures author names, publication dates, and article titles while maintaining consistent citation styles across your content.

Real-time fact-checking integration: Cross-references claims against multiple authoritative databases and flags potential inaccuracies for human review. Maintains citation quality logs showing verification dates and source updates.

These agents integrate with content optimization workflows that structure content for both Google rankings and LLM indexing.

Governance and Human Oversight Checkpoints

Automation requires systematic quality gates to maintain E-E-A-T standards.

Stakeholder roles and review cadence: Content creators supply raw materials and context. Editors verify automated citations and approve fact-checking results. Legal teams review YMYL content before publication. Content ops manages agent configurations and audit logs.

Quality gates for automated content: All AI-generated citations require human verification before publication. YMYL topics trigger additional legal review. Schema markup validation runs automatically but requires manual spot-checks weekly.

Who/How/Why disclosure template: Who: Content ops; How: agents capture citations → editor verifies → legal signs off for YMYL; Why: maintain provenance and auditable trail for each claim. Research-backed governance frameworks emphasize stakeholder engagement and iterative refinement.

Audit logging and compliance tracking: Track agent decisions, human overrides, and revision histories in versioned logs. Run governance audits monthly to refine agent performance and update quality thresholds.

FAQ

How do I measure E-E-A-T improvement over time?

Measuring E-E-A-T improvement over time involves tracking key metrics that reflect expertise, experience, authoritativeness, and trustworthiness. While E-E-A-T isn’t a single score, you can monitor changes in search rankings, organic traffic, and user engagement. Tools like SEMrush and Ahrefs are valuable for SEO analysis, allowing you to benchmark performance and observe how E-E-A-T signals evolve.

Beyond traditional SEO metrics, consider tracking AI Overview citations, branded search volume, and industry mentions, as these can indicate growing authority and trust. E-E-A-T success often manifests as reduced ranking volatility and increased citation rates rather than immediate traffic spikes. Regularly auditing your existing content against E-E-A-T criteria and setting quantifiable targets, such as improving “proof density” on core pages, can provide a structured approach to measuring progress.

E-E-A-T is a long-term strategy, typically taking months to build genuine authority and trust signals. However, implementing proper schema markup and author attribution can yield more immediate effects. New measurement tools, like BrightEdge and Authoritas, now offer AI Overview monitoring capabilities, and custom alerts can track brand mentions across various AI platforms, providing a comprehensive view of your E-E-A-T performance.

What's the difference between E-E-A-T for YMYL and general content?

E-E-A-T, encompassing Experience, Expertise, Authoritativeness, and Trustworthiness, is crucial for all content, but its importance is significantly amplified for “Your Money or Your Life” (YMYL) topics. YMYL content directly impacts a person’s health, financial stability, or safety, such as medical, financial, or legal information. Google holds YMYL sites to much stricter E-E-A-T standards due to the potential for harm from inaccurate or misleading information.

For YMYL content, demonstrating high levels of E-E-A-T is a top priority. This means not only providing accurate information but also clearly showcasing the credentials, experience, and reliability of the authors and the website itself. For general content, while E-E-A-T is still vital for establishing credibility and ranking, the scrutiny is less intense. However, Google’s updated guidelines suggest that demonstrating experience and expertise is increasingly important across all niches, not just YMYL.

Ultimately, all websites should strive for the highest possible E-E-A-T. The higher the YMYL “degree” of your content, the more scrupulous Google will be in assessing your E-E A-T to ensure user safety. This often translates to a greater need for formal qualifications, extensive citations, and robust evidence of trustworthiness for YMYL topics, whereas practical experience and demonstrated knowledge can be equally valuable for general content.

Can AI agents handle E-E-A-T compliance automatically?

AI agents can significantly enhance E-E-A-T compliance by automating routine tasks, reducing manual workload, and ensuring continuous monitoring and enforcement of regulatory standards. These agents excel at handling repetitive, rule-based processes, such as monitoring transactions, flagging potential issues, and generating standardized reports, which minimizes human error and frees up teams for more strategic analyses.

While AI agents can speed up content creation and improve quality, they don’t entirely replace human oversight. The most effective approach combines AI efficiency with human expertise. Human review remains essential to ensure that the content truly demonstrates experience, expertise, authoritativeness, and trustworthiness, especially in nuanced or rapidly changing fields.

AI agent governance and compliance solutions can detect compliance risks, enforce guardrails, monitor agent activity and automate reporting to ensure continuous alignment with regulatory standards. This means embedding security, privacy, and governance controls directly into AI-driven workflows. However, human-in-the-loop processes, expert reviews, and structured audits are crucial to validate claims, correct errors, and ensure the context and nuances that AI might miss, ultimately delivering secure, reliable, and production-ready solutions.

What are the most common E-E-A-T mistakes content teams make?

Content teams often make several common E-E-A-T mistakes that hinder their ability to establish and demonstrate genuine authority. One prevalent error is publishing low-quality content that merely copies existing ranking content without adding unique value or depth. This includes content that lacks appropriate backlinks or fails to link out to other authoritative sources, signaling a lack of visibility or perceived authority within the niche.

Another significant mistake is misrepresenting expertise or creating fake authors. While formal qualifications are important, especially for YMYL topics, simply listing credentials without demonstrating practical application or providing evidence of experience can be ineffective. Similarly, author bios that are lackluster or missing altogether, or content published without a clear author name, undermine trustworthiness.

Other common pitfalls include not having clear and easily accessible contact information, failing to include references or links to sources within the content, and allowing content to become outdated. Excessive spammy, hateful, or false user-generated content also suggests poor site moderation, which severely damages E-E-A-T. Ultimately, many teams focus on quick E-E-A-T wins like author boxes instead of building comprehensive trust assets that compound over time, leading to a lack of “proof density” across their content.