Large language models transform how businesses operate across every department. This article explores these AI systems from fundamentals to practical applications. We’ll walk through their inner workings, real-world uses, and implementation strategies that don’t require coding expertise.

Whether you’re just starting with AI or looking to expand your current implementation, this guide provides a comprehensive overview of how these technologies create business value. Recent statistics show organizations achieve 3-10x returns within twelve months of implementation, making this technology too important to ignore.

The Business Revolution: How Large Language Models Are Transforming Industries

Large language models represent sophisticated AI systems trained on massive text datasets that generate human-like responses to prompts. These powerful tools now drive business transformation across every sector, from healthcare to finance and beyond. Companies adopting this technology report dramatic productivity gains, enhanced customer experiences, and competitive advantages previously unattainable.

The momentum behind LLMs continues accelerating at breakneck speed. According to the U.S. Bureau of Labor Statistics, jobs related to artificial intelligence and machine learning will grow by approximately 23 percent through 2032, much faster than average occupations. This explosive demand signals how critical understanding and leveraging these technologies has become for forward-thinking organizations.

Throughout this article, we’ll explore what large language models actually do, examine practical applications driving real business value, and provide actionable strategies for implementing LLMs without technical expertise. Whether you’re a seasoned executive or new to AI concepts, you’ll discover how to use LLMs to transform your operations and results.

What Are Large Language Models?

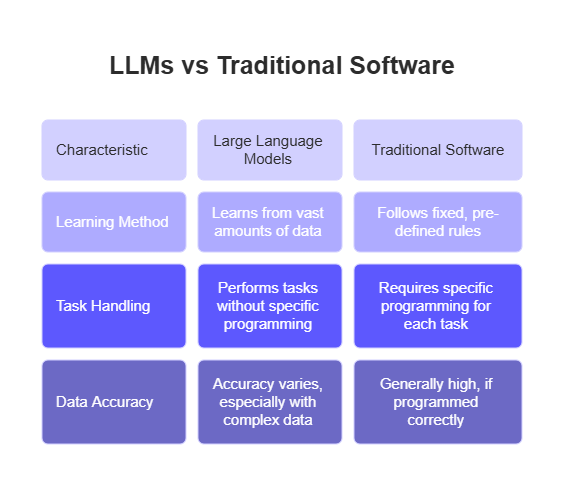

Large language models are sophisticated AI systems. They learn from trillions of words found in books, articles, and websites. These models recognize patterns between words and concepts to generate human-like text. Unlike traditional software with fixed rules, LLMs learn from examples, so they can handle tasks nobody specifically programmed them to perform. We’ve seen these tools transform how businesses create content, interact with customers, and analyze unstructured data.

Where do these models fit in the tech world? The distinction between AI and machine learning helps clarify their position. AI covers any technology that mimics human intelligence. Machine learning is a subset focused on pattern recognition through exposure to data. LLMs sit within machine learning, applying it to language. Recent research projects that the global LLM market will grow from $1.59 billion in 2023 to $259.8 billion by 2030, a 79.80% compound annual growth rate. This rapid expansion highlights their increasing business importance.

Companies implementing these systems face practical challenges too. While 58% of organizations experiment with LLMs, only 23% plan commercial deployments. Reported accuracy on business data is just 22%, and it drops significantly for complex requests. We must weigh these limitations alongside the potential benefits.

The Evolution of Language Models

Early text prediction relied on simple statistical approaches called n-grams. These methods predicted words based on limited preceding context. Neural networks later enhanced these capabilities by processing sequences more effectively.
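To make the n-gram idea concrete, here is a minimal sketch of a bigram predictor, the simplest case where only one preceding word is used as context. The sample sentence and the function names `train_bigrams` and `predict_next` are illustrative assumptions, not taken from any particular system.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each word follows each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text, far smaller than any real corpus.
corpus = "the customer asked a question the customer asked for help the agent answered"
model = train_bigrams(corpus)
print(predict_next(model, "customer"))  # prints "asked"
```

Because the prediction depends on a single preceding word, the model has no way to use anything said earlier in the sentence, which is the limited context described above.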

Recurrent Neural Networks marked a significant advancement because they could maintain a memory of previous inputs. Yet they struggled with longer texts. The real breakthrough came with attention mechanisms, which allowed models to focus on relevant words regardless of position. Transformers, introduced in 2017, built on attention to process entire sequences simultaneously.

Key Characteristics of Modern LLMs

Today’s models feature billions or even trillions of parameters, the adjustable values that determine how input transforms into output. More parameters generally capture language nuances better. Context windows determine how much text these systems consider when generating responses.

Self-attention mechanisms let LLMs weigh word importance when creating outputs. This mimics how we humans focus on relevant information. Organizations can fine-tune pre-trained models for specific tasks or industries. This customization process saves both time and computational resources.

Applications of these models extend across various business functions. Customer support teams using LLMs improve satisfaction scores while reducing response times. Content teams generate high-quality material more quickly. These practical applications make LLMs valuable operational assets despite their current limitations.

How Large Language Models Work

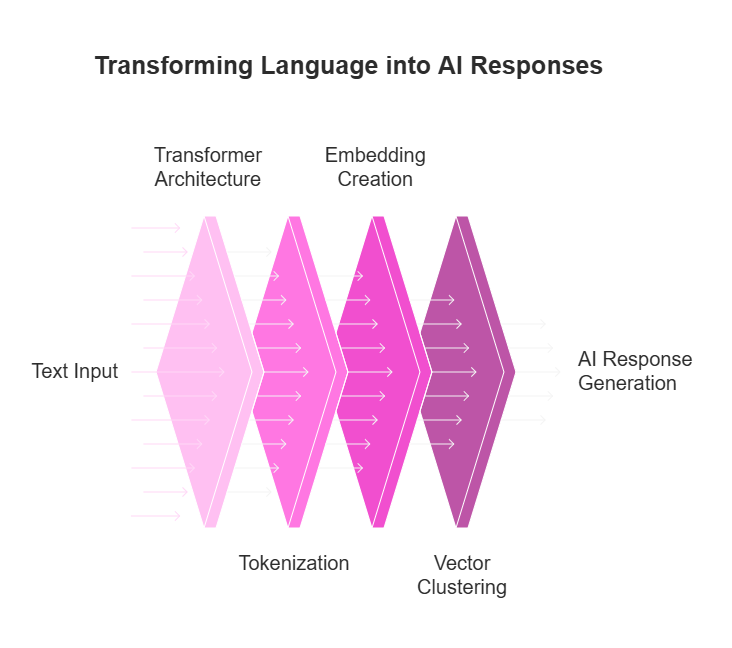

Modern AI systems respond to our questions through a blend of math and computing power. Large language models (LLMs) use the transformer architecture, a design that processes the words of an input all at once rather than one after another. This parallel approach helps capture connections between words regardless of where they appear in text. When we train these systems, they consume trillions of words from diverse sources.

The foundation of these models involves converting language into numbers. Text is split into tokens, and each token is mapped to an embedding, a mathematical representation that computers can process. These embeddings are multidimensional vectors in which similar concepts naturally cluster together. Like a mathematical map of language, this arrangement lets models navigate between related ideas when generating responses.

Transformer Architecture Explained

At their core, transformers consist of stacked layers, organized in the original design into encoders and decoders. The real magic happens in self-attention layers, where each word connects with every other word in the input. These connections help determine how relevant words are to each other in context.

Several attention heads work simultaneously to examine different aspects of the relationships between words. This parallel processing dramatically speeds up both training and inference compared to older models. We find this approach particularly effective for handling massive datasets and complex language tasks.
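The way each word connects with every other word can be sketched with scaled dot-product self-attention for a single head. This is a simplified illustration: the three 2-dimensional token vectors are made up, and the learned projection matrices that real transformers apply to produce queries, keys, and values are left out.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values.

    Real transformers first apply learned projection matrices; they are
    omitted here to keep the sketch short.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Compare this token with every token in the sequence, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Blend the token vectors according to the attention weights.
        blended = [
            sum(w * v[i] for w, v in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional vectors for a three-token input.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how strongly one token attends to all three tokens. The first token gives more weight to the similar second token than to the dissimilar third one, which is the relevance weighting the section describes. A multi-head layer would run several such computations side by side on different projections.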

Training Process and Data Requirements

Creating an effective LLM starts with pretraining on diverse sources: books, articles, websites, and code. This phase requires enormous computing resources, often involving thousands of GPUs running for weeks. A single training run for advanced models can cost millions in computing expenses.

Teams must carefully select and clean training materials to minimize biases while ensuring broad topic coverage. Models learn from patterns in their training data, so any limitations in this information will show up in their outputs, potentially creating blind spots for business applications.

Understanding Tokens and Embeddings

Tokenization breaks text into manageable chunks, sometimes whole words, sometimes just fragments. For example, “Democratization” might become three separate tokens depending on the system used. Each model recognizes a fixed vocabulary of these tokens.
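As a rough illustration of how one word can split into several tokens, the sketch below uses greedy longest-match splitting against a tiny, hypothetical vocabulary. Production tokenizers learn their vocabularies from data with algorithms such as byte-pair encoding, so the actual pieces vary by model.

```python
def tokenize(text, vocab):
    """Greedy longest-match split of `text` into pieces found in `vocab`.

    Characters not covered by the vocabulary become single-character tokens.
    """
    text = text.lower()
    tokens = []
    start = 0
    while start < len(text):
        # Try the longest candidate first, then shrink one character at a time.
        for end in range(len(text), start, -1):
            piece = text[start:end]
            if piece in vocab or end == start + 1:
                tokens.append(piece)
                start = end
                break
    return tokens


# Hypothetical fixed vocabulary; real models use tens of thousands of entries.
vocab = {"demo", "crat", "ization", "token", "s"}
print(tokenize("Democratization", vocab))  # ['demo', 'crat', 'ization']
print(tokenize("tokens", vocab))           # ['token', 's']
```

With this vocabulary the word breaks into three fragments, none of which is a whole word on its own.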

The system converts tokens into embedding vectors, essentially lists of numbers in hundreds of dimensions. Through training, models learn to position related concepts near each other in this mathematical space. When generating text, models navigate this landscape, moving between embeddings to produce language that makes sense to us.
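The idea of related concepts sitting near each other can be shown with cosine similarity, a common way to compare embedding vectors. The three-dimensional vectors below are invented for illustration. Real embeddings have hundreds of dimensions and are learned during training.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(word, embeddings):
    """Find the other word whose vector points in the most similar direction."""
    others = (w for w in embeddings if w != word)
    return max(others, key=lambda w: cosine_similarity(embeddings[word], embeddings[w]))


# Hypothetical embeddings: two billing terms and one unrelated term.
embeddings = {
    "invoice": [0.9, 0.1, 0.0],
    "receipt": [0.8, 0.2, 0.1],
    "vacation": [0.0, 0.2, 0.9],
}
print(nearest("invoice", embeddings))  # prints "receipt"
```

The same comparison underlies meaning-based search: a query is embedded, and the closest stored vectors are returned.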

Introduction to Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) solves a common problem with standard LLMs: they sometimes generate incorrect information that sounds right. RAG combines language models with real-time information retrieval, allowing them to ground responses in verified sources rather than relying only on training patterns.

The process works by first searching relevant documents for information related to a query. It then uses those retrieved facts to inform the response.
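The two steps, retrieve and then generate, can be sketched as follows. This is a toy version under stated assumptions: retrieval is scored by simple word overlap rather than vector search, the documents are invented, and the final call to a language model is left out, so the example stops at the prompt that would be sent.

```python
def score(query, document):
    """Count distinct query words that also appear in the document."""
    query_words = set(query.lower().split())
    doc_words = set(document.lower().split())
    return len(query_words & doc_words)


def retrieve(query, documents, top_k=1):
    """Return the `top_k` documents that share the most words with the query."""
    ranked = sorted(documents, key=lambda d: score(query, d), reverse=True)
    return ranked[:top_k]


def build_prompt(query, documents):
    """Place the retrieved text ahead of the question so the answer can cite it."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Hypothetical company knowledge base.
documents = [
    "Refunds are issued within 14 days of a return request.",
    "Support is available by chat on weekdays.",
    "Enterprise plans include a dedicated account manager.",
]
prompt = build_prompt("How many days do refunds take?", documents)
print(prompt)
```

The model then answers from the retrieved refund policy instead of guessing from its training data.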

Applications of Large Language Models

How do we actually use LLMs in everyday business operations? Recent projections show these technologies will automate nearly 50% of digital work by the end of 2025. An estimated 750 million applications will utilize LLM capabilities across various sectors. The North American market alone should reach $105.5 billion by 2030, growing at 72.17% annually, according to industry research. This rapid adoption stems from real returns in productivity and operational efficiency.

Content Creation and Summarization

We’ve found that marketing teams can transform their output by using LLMs to draft blog posts and email campaigns at scale. These tools generate variations for A/B testing while maintaining a consistent brand voice. Executive teams benefit from automatic summarization of lengthy reports, extracting key insights without reading entire documents.

Customer Support and Chatbots

AI-powered chatbots now handle up to 80% of routine customer inquiries without human intervention. These systems recognize intent and provide contextually relevant answers. They seamlessly escalate complex issues to human agents when necessary. The result? Reduced wait times and 24/7 availability that improves customer satisfaction scores.

What sets modern implementations apart is their personalization capability. By analyzing conversation history and customer data, these assistants adjust tone and recommendations to match individual preferences. Financial institutions report particular success with this approach. Personalized guidance increases both customer trust and product adoption rates.

Research and Data Analysis

Research teams across industries deploy LLMs to analyze vast document collections and proprietary databases. Vector search technology enables precise information retrieval based on meaning rather than keywords alone. This capability proves especially valuable in pharmaceutical research and legal discovery.

The key advancement lies in data-grounded responses through Retrieval Augmented Generation. By connecting LLMs to verified information sources, we ensure outputs remain factually accurate and trustworthy. Healthcare providers implement these systems to summarize patient records and identify treatment options. They can stay current with medical literature while maintaining strict accuracy requirements essential for clinical applications.

Industries Transformed by LLMs

Dramatic shifts occur in specific sectors when LLM technology enters the picture. About 67% of organizations now use generative AI products built on these models. The impact of LLMs spans four key areas: sales and marketing, healthcare, finance, and education. Each field shows measurable results that boost bottom-line performance.

The global LLM market is projected to grow from $1.59 billion in 2023 to $259.8 billion by 2030, a CAGR of 79.80%, which shows how quickly the industries adopting LLMs are changing.

Sales and Marketing Automation

Sales teams achieve higher conversion rates with AI-crafted messages that speak directly to prospect pain points. This shifts prospecting from a volume-based approach to a precision-focused one.

Marketing teams use LLMs to score leads based on engagement patterns. They create personalized outreach for specific buyer personas. Campaign creation now happens in hours instead of weeks.

Healthcare and Finance Insights

In healthcare settings, LLMs analyze clinical trial data and identify treatment patterns. Medical professionals leverage these tools for diagnostic support. These systems serve as valuable second opinions based on symptom descriptions and patient history.

Financial institutions, too, benefit from these technologies. They deploy models for risk assessment and fraud detection. Algorithms process news events, economic indicators, and transaction patterns to flag anomalies human analysts might miss.

Education and Training

Educational institutions implement LLM-powered tutoring systems that adapt to individual learning styles. Students receive personalized feedback rather than generic responses. This approach addresses specific misunderstandings unique to each learner.

Corporate training programs follow similar principles for employee onboarding. New team members interact with knowledge bases through natural language. They receive customized guidance that addresses their specific questions without overwhelming them with irrelevant information.

The Importance of LLMs for Businesses

The business ROI from LLMs speaks for itself. Our analysis shows companies achieve 3-10x returns within just twelve months of implementation. Teams complete tasks 30% faster while quality improves by 25-40%. Few technology investments deliver comparable returns across so many functions.

According to Netguru’s research on e-commerce LLM applications, 78% of major retailers now use these systems. These businesses see 32% higher conversion rates. Support tickets drop by 27%. Product launches happen 41% faster compared to traditional methods.

Productivity Enhancements

Marketing teams now create months of content in days. AI handles routine questions, so customer service staff manage 3-4x more inquiries. Data analysts, too, produce insights 5x faster with LLM-powered tools.

Cost Reduction Opportunities

Support costs drop 30-50% when LLM chatbots handle first-level inquiries. Document processing errors fall by 85%, which reduces expensive rework.

Competitive Advantages

Fast response to market changes creates decisive business advantages. The Stanford AI Index Report 2025 confirms organizations using LLMs make more accurate decisions. This data-driven approach results in 35% better forecasting and 28% improved resource allocation.

Implementing LLMs with No-Code Solutions

Organizations can now harness LLM capabilities without facing technical barriers. No-code AI solutions remove complexity from the equation. A remarkable example comes from manufacturing, where one global automaker trained 1,200 employees as citizen data scientists who deployed thousands of models across facilities, saving over 10,000 hours annually.

Our approach to implementation follows three straightforward steps: select a relevant use case, connect appropriate data sources, and configure desired outputs. Marketing professionals create campaigns in days instead of weeks with these tools. Customer service teams set up functional chatbots within hours. Meanwhile, research departments develop document analysis solutions during brief work sessions. This accessibility shifts innovation from IT-dependent projects toward business-led initiatives where everyone contributes.

Building Custom AI Assistants

Intuitive interfaces transform how we design conversation flows for AI systems. Teams simply drag elements onto digital canvases to build decision trees, craft response templates, and establish escalation paths when needed. These visual builders eliminate coding requirements while maintaining powerful functionality.

Pre-built components handle common scenarios like scheduling appointments or answering frequently asked questions. Starting with templates accelerates development significantly. Marketing groups can customize assistants to match brand voice perfectly, while sales departments build agents following proven conversion frameworks that drive results.

Training Models on Your Data

Connect LLMs directly to company-specific information through document libraries that ground responses in organizational knowledge. By uploading policies, product specifications, and customer feedback, generic models transform into specialized business tools tailored to your needs.

Customization happens without coding through user-friendly prompt engineering interfaces. Simple adjustments to generation parameters allow anyone to shift output style from highly creative to strictly conservative. Our experience shows this capability proves essential when adapting models to different business contexts and communication requirements.
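One common setting behind a creative-to-conservative control is sampling temperature. The sketch below assumes that is the parameter being adjusted, which the platform may or may not expose under that name. It shows how temperature reshapes the probabilities a model assigns to candidate next words. The candidate words and their raw scores are invented.

```python
import math


def token_probabilities(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens them."""
    scaled = [value / temperature for value in logits.values()]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return {token: e / total for token, e in zip(logits, exps)}


# Hypothetical raw scores for three candidate next words.
logits = {"reliable": 2.0, "robust": 1.5, "dazzling": 0.5}

for temperature in (0.2, 1.0, 2.0):
    probs = token_probabilities(logits, temperature)
    print(temperature, {token: round(p, 2) for token, p in probs.items()})
```

At a low temperature almost all probability goes to the top-scoring word, giving predictable output. At a higher temperature the less likely words gain probability, so the generated text varies more.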

Integration with Existing Workflows

Point-and-click API configuration connects AI capabilities to current systems without developer dependencies. Teams move from prototypes to production by embedding agents into websites, CRMs, and communication platforms seamlessly.

Automation triggers activate AI processes based on specific events such as form submissions or email receipts. Organizations implementing these solutions report substantial returns on investment according to recent Microsoft research. Companies average $3.70 back for every dollar invested in generative AI implementations that enhance existing workflows and business processes.

Future Developments in Large Language Models

Exciting LLM trends will reshape how we use AI daily. Recent data shows 50% of digital work will soon run through LLM applications. The future of large language models includes 750 million apps utilizing these capabilities by next year.

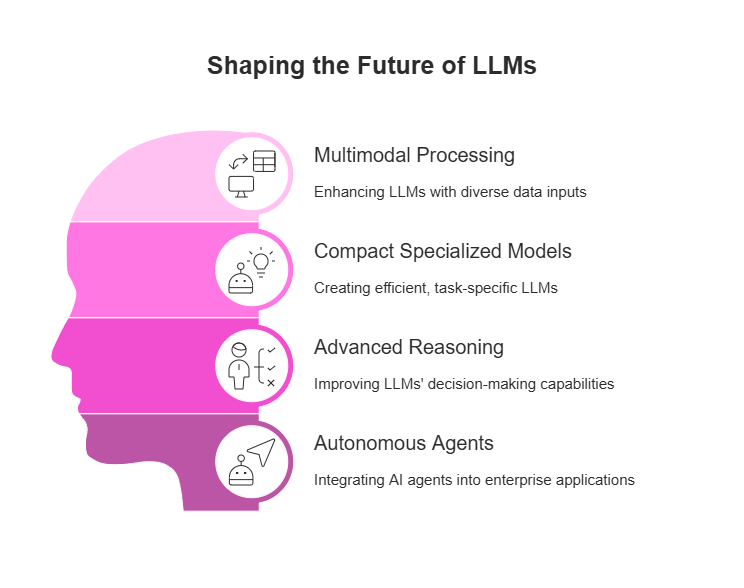

Our industry stands at a pivotal moment. Three key developments will drive innovation: multimodal processing, compact specialized models, and advanced reasoning. Experts predict that by 2028, autonomous agents will appear in 33% of enterprise applications. These agents will automate about 15% of work decisions.

We believe organizations embracing these technologies gain tremendous advantages in efficiency and creativity.

Multimodal Capabilities

Modern LLMs now process text, images, audio, and video together. Gemini on Cubeo AI shows this capability with its massive 2-million token window. This equals over 1,500 pages of text.

These multimodal systems create new possibilities for our clients. Marketing teams can analyze visual feedback and generate multimedia content from simple prompts. The integration happens seamlessly across channels.

Smaller More Efficient Models

Compact models deliver 90% of capabilities at just 10% of the cost. GPT-4o Mini demonstrates this with pricing at $0.15 per million input tokens. This makes AI accessible for businesses of all sizes.
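To see what that rate means in practice, here is a small cost estimate. Only the $0.15 per million input tokens figure comes from the text. The email volume and tokens per email are hypothetical, and output tokens, which are typically billed separately, are not included.

```python
INPUT_PRICE_PER_MILLION = 0.15  # USD, the input-token rate quoted above


def input_cost(tokens, price_per_million=INPUT_PRICE_PER_MILLION):
    """Return the cost in USD of sending `tokens` input tokens."""
    return tokens / 1_000_000 * price_per_million


# Hypothetical workload: 2,000 emails a day at roughly 500 input tokens each.
emails_per_day = 2_000
tokens_per_email = 500
daily_tokens = emails_per_day * tokens_per_email

print(f"${input_cost(daily_tokens):.2f} per day")  # $0.15 per day
```

At this volume the input side of the bill stays at cents per day, which is why compact models suit high-volume routine tasks.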

These models excel at everyday tasks like drafting emails and summarizing meetings. Their smaller footprint allows deployment on edge devices. Performance remains impressive even in limited bandwidth environments.

Enhanced Reasoning Abilities

Tomorrow’s models will feature improved logical thinking. They’ll connect concepts across domains to solve complex problems. Chain-of-thought capabilities help break multi-step challenges into manageable parts.

This advancement particularly helps with financial analysis and legal research. Models become thought partners rather than simple tools. They suggest alternative approaches based on context and previous interactions.

FAQs

How do large language models process text?

Large language models utilize neural networks for understanding and generating human language. They are trained on vast datasets. Text is converted into numerical format using embeddings.

Which industries benefit most from LLMs?

Finance, healthcare, and education gain the most from large language models. These models enhance automation, personalization, and overall efficiency. LLMs offer tailored solutions using specialized knowledge across various fields.

Can non-technical teams implement LLMs without coding?

Yes, non-technical teams can implement LLMs using no-code platforms. These platforms simplify deploying large language models.

What are the key trends shaping the future of LLMs?

Key trends include multimodal capabilities, smaller and more efficient specialized models, and enhanced reasoning, along with a growing focus on ethical use.