Stop wasting weeks testing tools that never improve indexing speed or earn AI citations. This guide maps the best AI SEO tools to measurable KPIs you can test in 30 days.

Most practitioners choose tools by feature lists rather than outcomes. The result? Three weeks of testing with zero improvement in AI Overview presence (whether Google’s AI-generated answers surface and cite your content) or LLM citation rates (how often ChatGPT, Claude, or Perplexity quote you).

You need a selection framework that distinguishes traditional SEO from LEO (Large Language Model Optimization). LEO is how AI assistants find and quote your content when answering user questions.

This framework gives you one decision tree and starter stacks mapped to specific KPIs: indexing speed, AI Overview visibility, or content velocity. Teams using KPI-driven selection see measurable signals within their first month.

LEO strategies are becoming as critical as traditional SEO because AI models now influence content discovery at scale.

Ready to build a stack that moves your metrics? Let’s start with the framework.

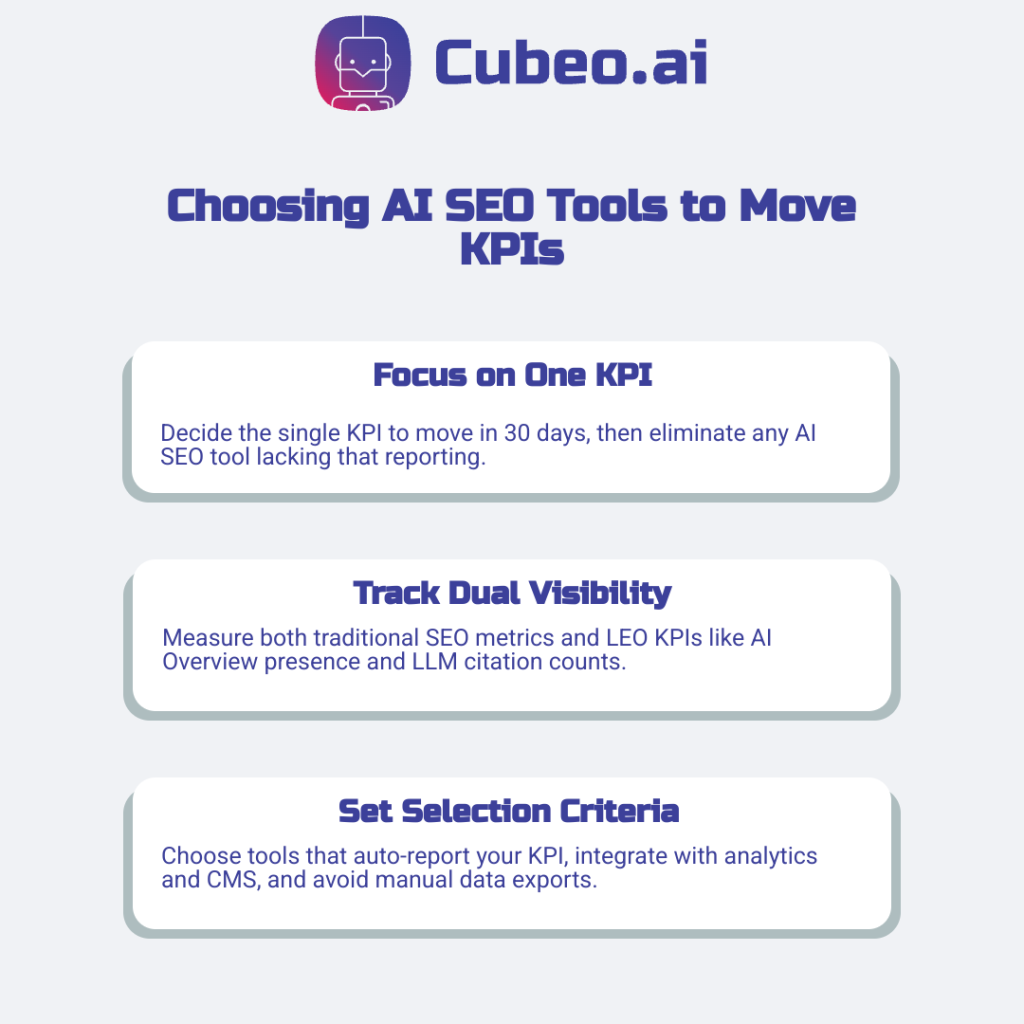

How to Choose AI SEO Tools That Move KPIs

If you chose tools for features rather than outcomes, you end up tracking rankings while competitors win AI Overviews. First decide the one KPI you’ll move in 30 days, then eliminate tools that can’t report it.

Measure both visibility types:

- Traditional SEO: Organic traffic, indexing speed, rankings

- LEO KPIs: AI Overview presence, LLM citation count, embedding relevance (how well content maps to AI model understanding), AI assistant traffic share

Real baseline: One agency achieved 4,162% organic growth and 10.5M impressions using KPI-driven selection, with 5% of its traffic coming from AI search agents and converting at higher rates.

Target for testing: Aim for detectable AI Overview presence or 10-20% indexing speed improvement within 30 days. The Semrush AI mode study provides correlation baselines between traditional rankings and LLM visibility for realistic target setting.
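To check the 10-20% indexing speed target, compare median time-to-index before and during the pilot. The sketch below is a minimal illustration, assuming you have recorded publish and first-indexed timestamps yourself; the function names and sample dates are hypothetical and not part of any tool's API.

```python
from datetime import datetime
from statistics import median


def hours_to_index(published: str, indexed: str) -> float:
    """Hours between publish and first-indexed timestamps (ISO 8601 strings)."""
    delta = datetime.fromisoformat(indexed) - datetime.fromisoformat(published)
    return delta.total_seconds() / 3600


def percent_faster(baseline_hours: list[float], pilot_hours: list[float]) -> float:
    """Percent reduction in median time-to-index relative to the baseline."""
    base = median(baseline_hours)
    pilot = median(pilot_hours)
    return (base - pilot) / base * 100


# Hypothetical sample data, for illustration only.
baseline = [
    hours_to_index("2025-01-01T09:00", "2025-01-03T09:00"),  # 48 hours
    hours_to_index("2025-01-05T09:00", "2025-01-07T21:00"),  # 60 hours
]
pilot = [
    hours_to_index("2025-02-01T09:00", "2025-02-03T01:00"),  # 40 hours
    hours_to_index("2025-02-04T09:00", "2025-02-06T07:00"),  # 46 hours
]

improvement = percent_faster(baseline, pilot)
print(f"Median time-to-index improved by {improvement:.1f}%")
```

The median is used rather than the mean so that one slow-to-index page does not dominate a small sample.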

Tool selection criteria:

- Reports your chosen KPI automatically

- Integrates with existing analytics and CMS

- Avoids manual data exports

Industry analysis identifies 12 emerging AI KPIs including chunk retrieval frequency (how often AI systems pull your content pieces). Start with simpler metrics if you lack dedicated analysts.

AI SEO Stacks by Function and Maturity

Decide the one KPI you want to move in 30 days (indexing speed, AI Overview presence, or content velocity), then use the stack below that targets it.

Most teams buy individual AI tools and create more work than they save. The solution lies in integrated stacks where tools share data and trigger actions automatically.

Modern AI SEO stacks organize into three core functions:

Content Optimization Stack: Research, writing, and optimization tools working together. These handle keyword clustering using DataForSEO SERP API for accurate volume and difficulty data, plus content generation and on-page optimization.

Technical SEO Stack: Crawling, indexing, and performance automation. AI handles sitemap generation, schema markup, and crawl budget optimization without manual steps.

Analytics and Measurement Stack: Tracking, reporting, and insights connecting traditional SEO metrics with AI visibility data.

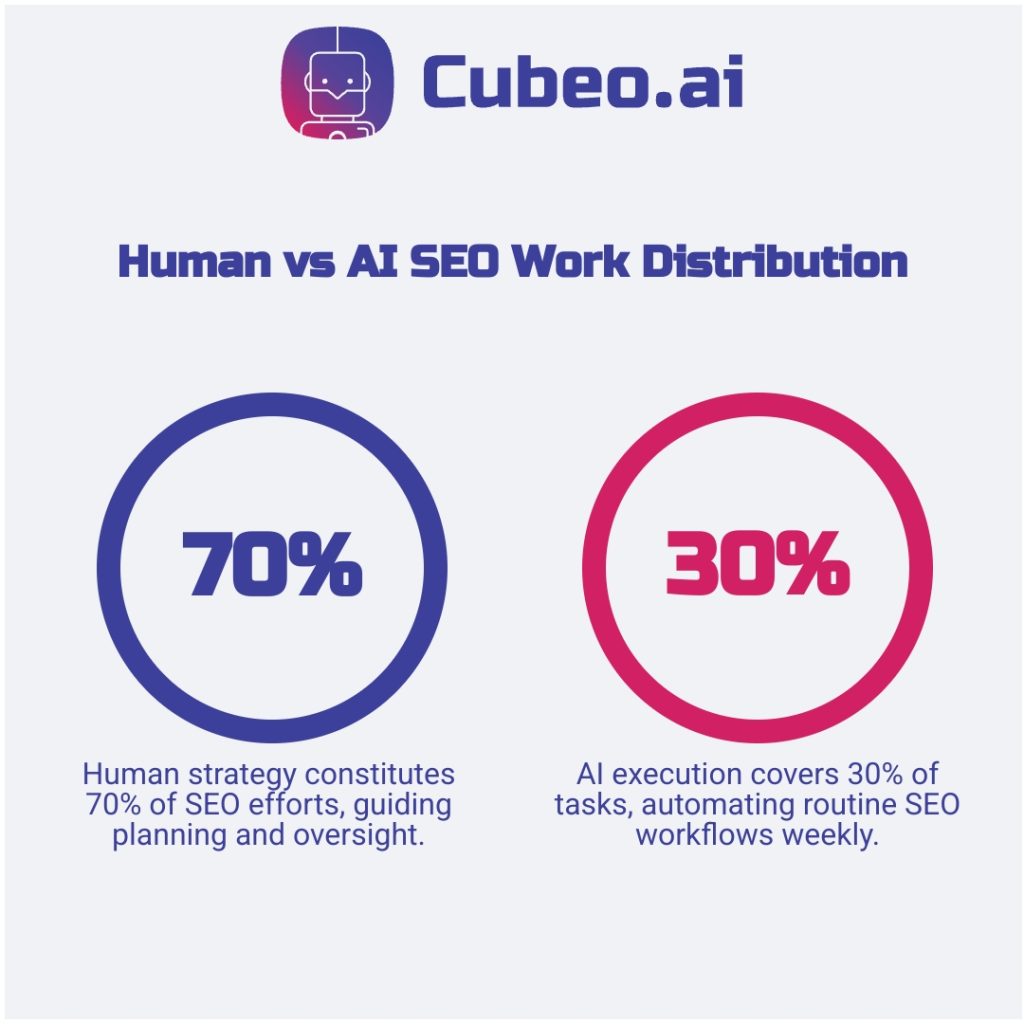

The efficiency gains are measurable. AI SEO tools save marketers 12.5 hours weekly on routine tasks, with experts suggesting the current balance runs roughly 70% human strategy and 30% AI execution.

Stack Templates by KPI Focus:

- Starter (1-3 people): Content-first tools plus CMS connections. KPI focus: Content velocity. 30-day test: Publish 5 optimized posts, measure average time-to-index.

- Scale (4-10 people): Multi-tool platforms with automated handoffs. KPI focus: Indexing speed. 30-day test: Automate index requests for 10 pages, track time reduction.

- Enterprise (11+ people): Full stack integration with advanced analytics. KPI focus: AI Overview presence. 30-day test: Deploy schema automation, measure AI citation changes.

Example workflow: Publish content → auto-add schema + update sitemap → send index request → analytics flags pages needing refresh.
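The first steps of this workflow can be sketched in a few lines. This is an illustrative outline only: it builds a minimal schema.org Article JSON-LD snippet and a sitemap `<url>` entry, and leaves the index request as a placeholder because the submission method depends on your stack. All function names here are hypothetical.

```python
import json
import xml.etree.ElementTree as ET


def article_schema(url: str, headline: str, date_published: str) -> str:
    """Build a minimal schema.org Article JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "url": url,
        "datePublished": date_published,
    }
    return json.dumps(data, indent=2)


def sitemap_entry(url: str, lastmod: str) -> str:
    """Build one <url> element for an XML sitemap."""
    node = ET.Element("url")
    ET.SubElement(node, "loc").text = url
    ET.SubElement(node, "lastmod").text = lastmod
    return ET.tostring(node, encoding="unicode")


def request_indexing(url: str) -> None:
    """Placeholder: swap in whatever submission method your stack supports."""
    print(f"Would request indexing for {url}")


def on_publish(url: str, headline: str, date_published: str) -> tuple[str, str]:
    """Run the publish-time steps in order and return the generated markup."""
    schema = article_schema(url, headline, date_published)
    entry = sitemap_entry(url, date_published)
    request_indexing(url)
    return schema, entry


schema, entry = on_publish(
    "https://example.com/post", "Example post", "2025-01-15"
)
```

The final step, flagging pages that need a refresh, would live in your analytics layer and is not shown here.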

Matching Tools to Team Size and Maturity

The biggest mistake is buying for future scale instead of current capacity. If you can’t staff it, a tool becomes overhead.

SMB reality: Only 40% of SMBs have websites and 72% say SEO matters, yet 54% run marketing solo and 36% cite cost as a barrier.

Agency requirements: You must manage many clients efficiently. Demand multi-domain support, branded reports, team collaboration, and predictable per-client pricing. One case showed a 74% increase in visits and 83% more calls after selecting the right scalable tools.

Enterprise considerations: Require API access (programming interfaces connecting tools to existing systems), custom integrations, and compliance controls so SEO systems plug into data warehouses and governance frameworks.

Maturity progression: Start simple, prove impact, then add specialization and orchestration capabilities as expertise grows.

Explore curated tool directories filtered by team size and budget for a fast shortlist beyond these main categories.

A Simple Decision Tree to Pick Your AI SEO Stack

Analysis paralysis kills more AI SEO projects than poor tools. Teams spend months evaluating instead of running pilots and measuring impact.

Step 1: Define Primary KPI: Choose one KPI from your framework: organic traffic, indexing speed, or LLM visibility (how often AI assistants find and quote your content). Teams following systematic decision frameworks see measurable outcomes. Rocky Brands reported 74% revenue growth and Xponent21 reported 4,162% organic growth after aligning tools to a single KPI.

Step 2: Assess Team Capability: Choose tools your team can operate today, not what you might hire for later. Solo operators need different solutions than teams with dedicated technical resources.

Step 3: Set Budget Parameters: AI SEO investments typically range from $49 to $2,000 per month and deliver 4 to 7x ROI. Use these bands to set pilot budgets and factor in integration requirements with existing systems.
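As a rough worked example, the bands above translate into expected return ranges as follows. This sketch assumes "4 to 7x ROI" means returns of four to seven times monthly spend, which is an interpretation rather than something the figures guarantee; the function name is hypothetical.

```python
def roi_band(
    monthly_spend: float,
    low_multiple: float = 4.0,
    high_multiple: float = 7.0,
) -> tuple[float, float]:
    """Return the (low, high) expected monthly return for a given spend."""
    return monthly_spend * low_multiple, monthly_spend * high_multiple


# The low and high ends of the typical spend range, plus a midpoint.
for spend in (49, 500, 2000):
    low, high = roi_band(spend)
    print(f"${spend}/month -> ${low:,.0f} to ${high:,.0f} expected return")
```

Treat the output as a planning range for the pilot, not a forecast.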

Step 4: Match and Pilot: Select a starter stack matching your KPI, team size, and budget. Run pilots lasting two to four weeks measuring your chosen KPI.

Test one stack thoroughly rather than sampling many superficially.
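The four steps can be expressed as a small function. This is one possible encoding, not a definitive rule set: it uses the KPI-to-stack pairings from the stack templates, caps the recommendation at the tier your team size can operate today, and flags whether the budget falls in the typical $49 to $2,000 band. The names and the treatment of a 10-person team as Scale are assumptions.

```python
from dataclasses import dataclass

TIERS = ["Starter", "Scale", "Enterprise"]

# KPI focus per stack template.
KPI_TIER = {
    "content velocity": "Starter",
    "indexing speed": "Scale",
    "ai overview presence": "Enterprise",
}


@dataclass
class Recommendation:
    tier: str
    pilot_weeks: tuple[int, int]
    budget_in_typical_band: bool


def team_tier(team_size: int) -> str:
    """Largest tier the team can realistically staff (assumed cutoffs)."""
    if team_size <= 3:
        return "Starter"
    if team_size <= 10:
        return "Scale"
    return "Enterprise"


def pick_stack(kpi: str, team_size: int, monthly_budget: float) -> Recommendation:
    """Steps 1-4: KPI, team capability, budget check, then a pilot window."""
    key = kpi.strip().lower()
    if key not in KPI_TIER:
        raise ValueError(f"unknown KPI: {kpi!r}")
    # Take the smaller of the two tiers: buy for current capacity, not future scale.
    tier = min(KPI_TIER[key], team_tier(team_size), key=TIERS.index)
    return Recommendation(
        tier=tier,
        pilot_weeks=(2, 4),
        budget_in_typical_band=49 <= monthly_budget <= 2000,
    )


rec = pick_stack("AI Overview presence", team_size=2, monthly_budget=300)
print(rec.tier)  # Starter: a two-person team cannot staff an Enterprise stack
```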

Measuring Success with AI Overviews and LLM Citations

Track four practical metrics and run a 30-day pilot so you know your stack actually moves KPIs.

Your measurement checklist:

- AI Overview appearances – Weekly count of your content in Google’s AI-generated answer boxes. With 83% of companies prioritizing AI, this visibility matters. Target: detectable appearance within 30 days.

- LLM citation frequency – Run weekly queries on ChatGPT, Claude, and Perplexity to track when they quote your content. 86% of SEO professionals using AI see better results from systematic tracking. Target: rising citation trend over 30 days.

- Indexing speed – Measure publish-to-indexed time using Search Console. Target: 10-20% faster than baseline.

- LEO referral tracking – Tag test pages with UTMs and use analytics to isolate AI assistant referrals (visits that arrive because an assistant recommended your page). Target: measurable referral traffic.
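For the referral-tracking item above, the sketch below shows one way to tag pilot URLs with UTM parameters and to classify a referrer as coming from an AI assistant. The hostname list is an assumption for illustration; check it against the referrers that actually appear in your analytics before relying on it.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse

# Assumed referrer hostnames; verify against your own analytics data.
AI_REFERRER_HOSTS = {
    "chatgpt.com",
    "chat.openai.com",
    "claude.ai",
    "perplexity.ai",
    "www.perplexity.ai",
}


def add_utm(url: str, source: str, medium: str, campaign: str) -> str:
    """Append UTM parameters to a URL, keeping any existing query string."""
    parts = urlparse(url)
    query = dict(parse_qsl(parts.query))
    query.update(
        {"utm_source": source, "utm_medium": medium, "utm_campaign": campaign}
    )
    return urlunparse(parts._replace(query=urlencode(query)))


def is_ai_referral(referrer: str) -> bool:
    """True if the referrer hostname is in the assumed AI assistant list."""
    host = urlparse(referrer).hostname or ""
    return host in AI_REFERRER_HOSTS


tagged = add_utm(
    "https://example.com/post?ref=1", "leo-pilot", "referral", "30-day-test"
)
print(tagged)
print(is_ai_referral("https://chatgpt.com/"))
```

Counting `is_ai_referral` hits per week across the pilot pages gives the trend line for the 30-day test.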

Follow Google’s official guidance for AI Overview optimization and measurement. Aim for one clear signal within 30 days.